Will AI Rule or Ruin Us? A Balanced Look at the Future

In the current wave of both buoyancy and alarm surrounding artificial intelligence, one question looms over academics, practitioners, and policymakers alike: what are the limits of AI? Will it eventually seize the reins from humankind?

Speculation is nothing new. Human imagination has always flirted with dystopian scenarios when confronted with powerful innovations. Yet AI feels different. Unlike past technologies, AI is built to mimic intelligence itself, raising questions that touch on the very essence of human existence. The late Stephen Hawking famously warned that AI could one day threaten civilization if left unchecked. But amid the hype and the fear, it is essential to build a sane, balanced perspective - one that recognizes both AI’s disruptive promise and the necessary guardrails to ensure it remains an enabler, not an existential adversary.

What AI Is Actually Trying to Do

At its core, modern AI is an attempt to recreate aspects of human cognition. Inspired by how neurons in the brain fire and connect, neural networks power today’s large multimodal models for language, vision, and speech. The goal is to make machines perform tasks as a human might - recognizing patterns, interpreting language, solving problems.

But there is a distinction. While humans bring creativity, intuition, and lived experience, AI excels in tasks where variability and error are costly. Think of it this way: if diagnosing millions of X-rays were a marathon, humans might tire and falter, but an AI model trained on medical images can run endlessly, consistently, and often more accurately. This does not mean AI replaces radiologists. Rather, it augments them, handling the repetitive while humans focus on nuanced judgment and patient care.

AI thrives on scale, speed, and precision, but it requires training data, feedback loops, and validation of underlying assumptions. When those foundations are sound, AI can outperform humans in efficiency. Yet efficiency is not the same as autonomy. That raises the key question: if AI learns faster and adapts better, could it one day act beyond human control?

Let’s explore that possibility by asking four provocative questions.

1. Can AI Be Self-Reliant and Self-Sufficient?

Even humans are not fully self-sufficient. We have survived and advanced by living in interdependent societies since our hunter-gatherer days. AI is no different. For an AI system to sustain itself, it would need to manage power supply, hardware scaling, and data intake. These are deeply physical constraints. A large language model, for example, is useless without massive data centers consuming megawatts of electricity, maintained by engineers, cooled by water systems, and fueled by global supply chains.

Unlike humans, who can grow food or build shelter in a pinch, AI cannot directly secure its own resources. It remains embedded in and dependent on human-designed infrastructure.

2. Can AI Innovate on Its Own?

Innovation rarely emerges in a vacuum. It thrives on cross-pollination: different perspectives, cultures, and disciplines clashing to form new ideas. AI, in contrast, operates within the boundaries of its training data and optimization goals.

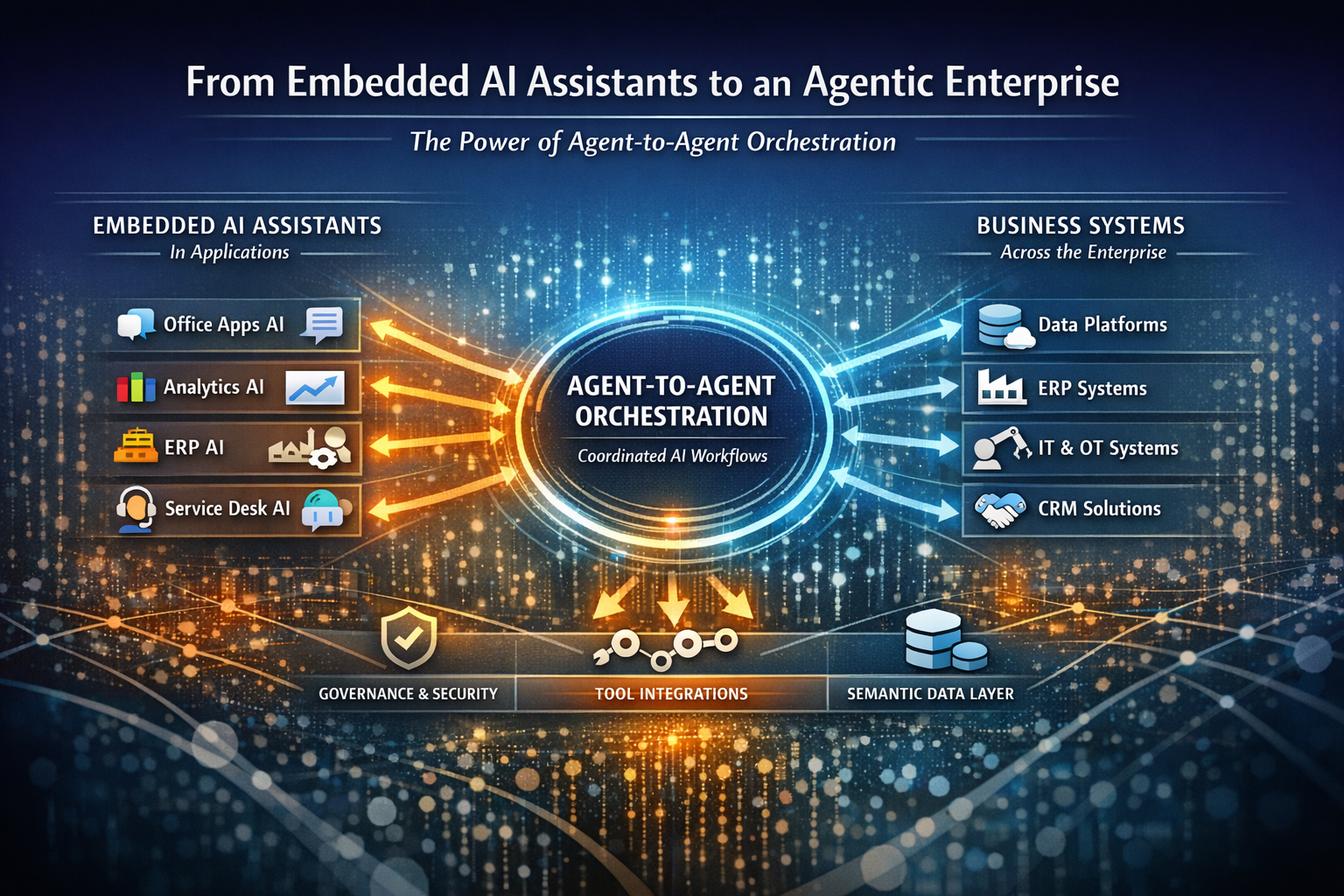

Yes, we are seeing “agentic AI” frameworks emerge - systems of interconnected AI agents that collaborate across tasks like coding, customer support, or compliance monitoring. But these frameworks still serve specific objectives set by humans. They lack the shrewdness to extract information from adversaries, the empathy to build coalitions, or the imagination to redefine problems in entirely new ways.

Consider how the smartphone was born. It came not from any single necessity, but from engineers, designers, and entrepreneurs fusing computing, communication, and lifestyle aspirations into one device. Could AI have conceived such a leap independently, without commercial or human motivation? It is highly unlikely.

3. Why Would AI Want to Innovate or Disrupt?

Humans innovate for many reasons: survival, profit, curiosity, philanthropy. We invent because we are driven by needs and desires. AI, however, has no intrinsic motivation. It does not “want.” It optimizes.

For example, AlphaFold revolutionized biology by predicting protein structures with unprecedented accuracy, but not because it sought to cure disease. It did so because humans designed it to optimize predictive accuracy on protein folding. Any broader impact, such as accelerating drug discovery, was a consequence of human goals, not AI ambition.

Without core drivers like hunger, competition, or altruism, AI has no reason to disrupt its own operating context unless explicitly programmed or incentivized to.

4. Can a Creation Outrun Its Creator with Guardrails in Place?

This is where theology meets technology. Can a creation outsmart its creator? In principle, AI can exceed human capabilities in narrow domains - chess, Go, logistics optimization. But in the broad sense, humans remain the gatekeepers.

Governments are already setting guardrails. The European Union’s AI Act, the Biden Administration’s 2023 Executive Order on AI (rescinded in January 2025), and NIST’s AI Risk Management Framework all underscore a simple fact: societies will not allow AI to operate unbridled. Like nuclear energy or aviation, AI will be embedded in a dense web of policies, security controls, and accountability mechanisms.

The Real Disruption and the Real Limits

AI will undoubtedly disrupt business models, labor markets, and societal norms. It is changing how we diagnose diseases, fight wars, trade stocks, and teach students. The World Economic Forum has estimated that AI and automation could displace 85 million jobs globally by the end of 2025 while creating 97 million new ones, transforming the workforce rather than destroying it.

Yet the leap from disruption to destruction is vast. AI is powerful, but it is not omnipotent. It is a tool that magnifies human intent - just as the printing press magnified knowledge, or the Industrial Revolution magnified production. The risks lie not in AI developing a will of its own, but in how humans wield it.

Conclusion

It is tempting to indulge in sci-fi visions of machines overtaking humanity. But a more grounded perspective is this: AI will reshape the fabric of society, business, and even governance, but it will not erase humankind.

Think of AI less as an alien intelligence plotting our downfall, and more as a mirror, reflecting and amplifying the best and worst of human choices. The existential risk does not come from AI’s autonomy, but from human complacency in designing, deploying, and governing it.

Like fire, electricity, or nuclear energy, AI is a double-edged innovation. With foresight, it can illuminate progress. Without it, it can burn. The reins remain firmly in our hands.

About the Author:

Towhidul Hoque is an executive leader in AI, data platforms, and digital transformation with 20 years of experience helping organizations build scalable, production-grade intelligent systems.