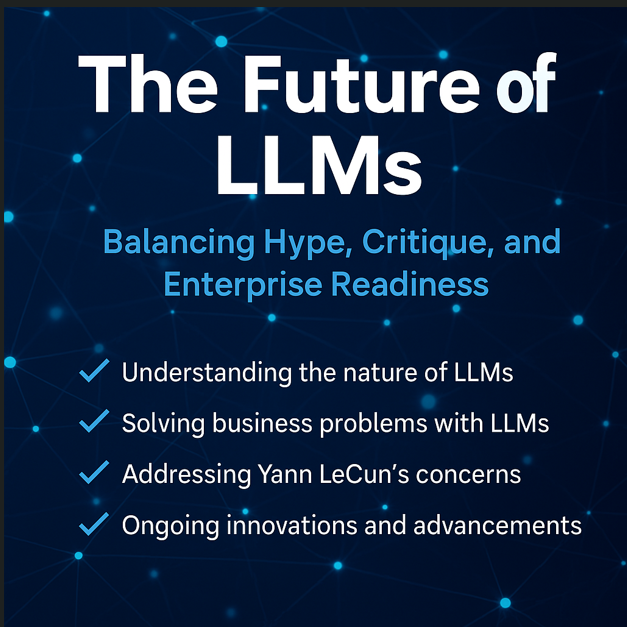

The Future of LLMs: Balancing Hype, Critique, and Enterprise Readiness

Over the past few years, large language models (LLMs) have become the face of generative AI. From ChatGPT to Claude, Gemini, and Llama, the AI landscape has been dominated by models trained on vast corpora of text data that can produce astonishingly coherent and contextually relevant outputs. Yet, as impressive as LLMs are, not everyone in the AI research community is convinced that they are the destination in the pursuit of true artificial intelligence.

Yann LeCun, Meta’s Chief AI Scientist and one of the godfathers of modern deep learning, has voiced strong skepticism about the long-term viability of autoregressive LLMs. His critique underscores a broader debate in the AI research world: What are the limits of LLMs, and how can we build systems that move beyond those boundaries?

Understanding the Nature of LLMs

LLMs are typically autoregressive transformers. They operate by predicting the next token (word or subword) in a sequence, one step at a time. This framework allows them to learn from massive datasets and generalize across a wide array of tasks: text generation, summarization, coding, customer support, legal analysis, and more.
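To make the next-token loop concrete, here is a minimal sketch in Python. It is a toy under stated assumptions: a bigram counter stands in for the transformer, so each prediction depends only on the previous token, whereas a real LLM conditions on the whole preceding context. The names `train_bigram` and `generate` and the tiny corpus are illustrative, not taken from any library.

```python
import random
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_tokens=8, seed=0):
    """Autoregressive loop: sample the next token, append it, repeat."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:  # no observed continuation, so stop early
            break
        candidates = list(followers.keys())
        weights = list(followers.values())
        # Sample in proportion to how often each continuation was seen.
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return output

# Toy corpus (an assumption for illustration only).
corpus = "the model predicts the next token and the next token follows the model".split()
counts = train_bigram(corpus)
print(" ".join(generate(counts, "the")))
```

Every generated word is chosen only because it followed the previous word in the training text, which is the statistical character of these models in miniature.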

However, LLMs are fundamentally statistical models. They do not “understand” in a human sense. Their outputs are based on patterns found in training data, rather than an internal representation of logic, causality, or the physical world.

Solving Business Problems with LLMs

Despite their theoretical limitations, LLMs are transforming how businesses operate:

- Productivity gains: Tools like GitHub Copilot or Notion AI reduce time spent on mundane or repetitive tasks.

- Customer service: Chatbots powered by LLMs are handling tier-1 support, freeing human agents for complex issues.

- Market insights: LLMs can process earnings transcripts, social media data, and news articles to generate financial signals.

- Internal knowledge management: Custom GPT-like agents are helping employees navigate enterprise data efficiently.

According to McKinsey & Company, generative AI could contribute between $2.6 trillion and $4.4 trillion annually to the global economy. Industries like banking, life sciences, and software are expected to see the largest gains.

LeCun’s Concerns: Valid but Not a Requiem

Yann LeCun has laid out a detailed critique of LLMs:

- No persistent memory: LLMs do not remember past sessions unless explicitly designed to (e.g., using vector databases).

- No planning or reasoning: LLMs excel at pattern recognition but falter at multi-step reasoning and at executing structured plans.

- Not grounded in the physical world: LLMs are blind and deaf - they learn from text, not from interaction with their environment.

Instead, LeCun champions Joint Embedding Predictive Architectures (JEPA), which focus on predicting high-level representations rather than surface-level tokens. These architectures aim to simulate aspects of how humans abstractly reason, remember, and perceive.

“LLMs will be obsolete in five years.”

Yann LeCun, Chief AI Scientist, Meta

Beyond LLMs: The Full Stack of Business Problems

While LLMs are powerful, most business problems extend far beyond language tasks. They require a mix of:

- Structured data: Sales, inventory, transactional logs

- Time series analysis: Forecasting, anomaly detection

- Optimization: Supply chain planning, logistics

- Causal inference: Understanding what drives outcomes, not just correlations

As a rough estimate, 70 to 80 percent of business problems today are still best addressed using traditional machine learning and statistical methods. About 15 to 25 percent are well suited to LLM-powered solutions, primarily in language-centric areas. An additional 10 to 20 percent of challenges remain unoptimized due to integration, scalability, or change management barriers. These ranges are approximate and overlap, so they do not sum to exactly 100 percent.

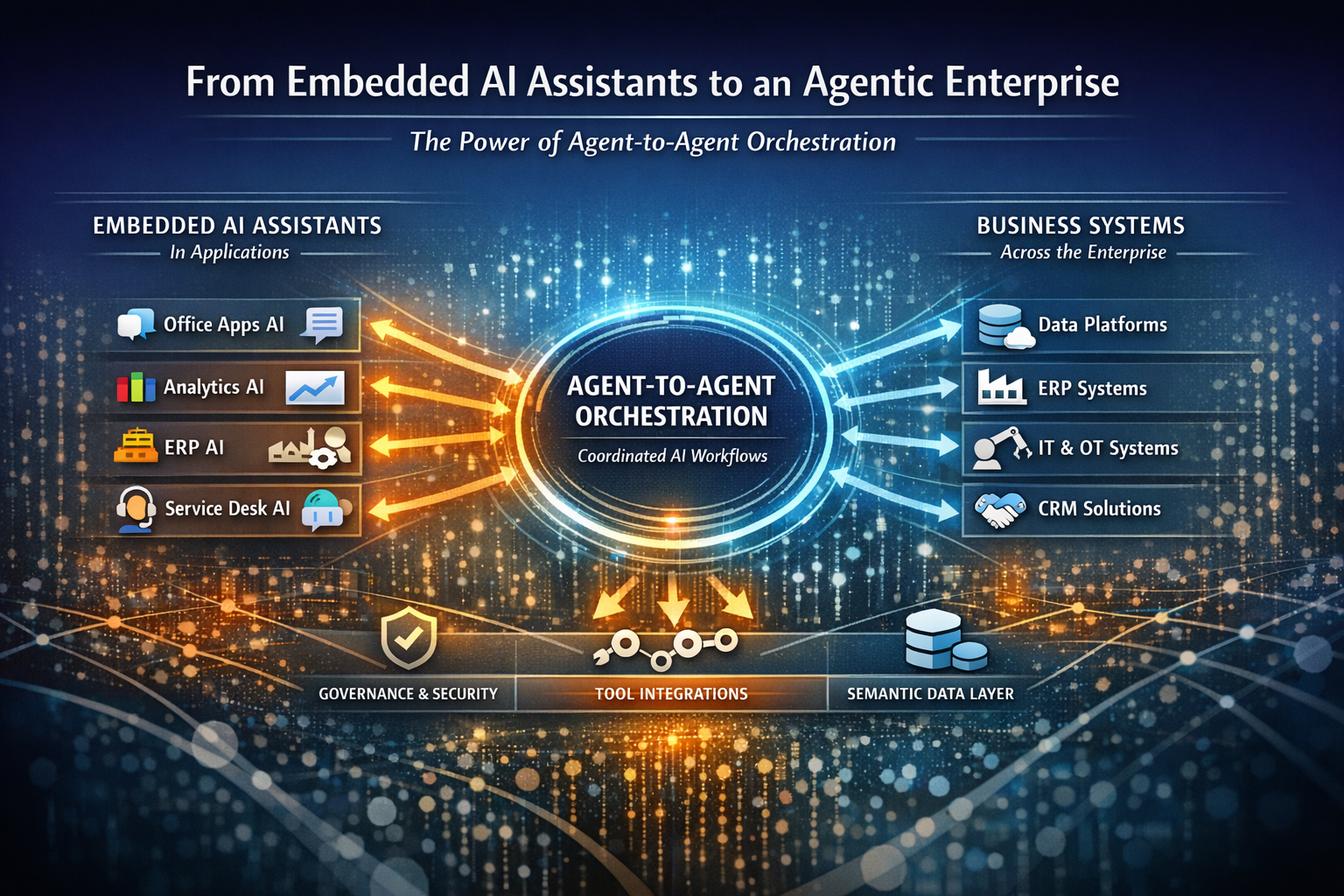

In most enterprise use cases, LLMs act as the interface layer or a supporting module - not the core intelligence. They must integrate with data pipelines, APIs, knowledge graphs, and existing analytics infrastructure to provide maximum value.
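The interface-layer pattern can be sketched in a few lines of Python. This is a simplified illustration, not a production design: `parse_intent` is a hypothetical keyword-matching stand-in for an LLM call, and the "core intelligence" is a plain z-score anomaly detector built on the standard library. The tool registry, threshold, and sales figures are all assumptions made for the example.

```python
from statistics import mean, stdev

def detect_anomalies(values, threshold=2.0):
    """Traditional statistical core: flag points far from the mean (z-score)."""
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

def parse_intent(question):
    """Hypothetical stand-in for an LLM call that maps text to a tool name."""
    text = question.lower()
    if "anomal" in text or "unusual" in text:
        return "detect_anomalies"
    return "unknown"

# Registry of analytics functions the language layer is allowed to call.
TOOLS = {"detect_anomalies": detect_anomalies}

def answer(question, series):
    """Language in, language out; the numeric work happens in the tool."""
    tool = TOOLS.get(parse_intent(question))
    if tool is None:
        return "Sorry, no tool is available for that request."
    indices = tool(series)
    return f"Found {len(indices)} unusual point(s) at positions {indices}."

# Illustrative data only.
daily_sales = [100, 102, 98, 101, 99, 103, 250, 100, 97, 102]
print(answer("Were there any unusual days in sales?", daily_sales))
```

The language component only translates the question into a tool choice and the result back into a sentence; the analysis itself is a conventional statistical method that could be swapped for a forecasting or optimization model without touching the interface.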

Addressing LeCun’s Concerns: Ongoing Innovations

Recognizing that LLMs serve best as an interface layer over traditional ML and statistical systems, researchers are tackling LeCun’s criticisms head-on by enhancing these models with memory, reasoning, and planning:

- Tool use: GPT-4 and similar models now invoke calculators, code interpreters, and external APIs to improve factuality.

- Retrieval-Augmented Generation (RAG): Retrieves query-relevant documents at inference time, typically from a vector database, and supplies them to the LLM as context.

- ReAct framework: Enables models to reason and act in multiple intermediate steps with feedback loops.

- Multimodal learning: Integrates vision, audio, and text inputs to build models grounded in sensory reality (e.g., Google Gemini, Claude 3).

- Agentic LLMs: Frameworks like Auto-GPT and LangGraph enable autonomous task execution, long-term memory, and planning.
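As one illustration of the techniques listed above, the retrieval step of RAG can be sketched as follows. This is a toy under stated assumptions: word counts stand in for learned dense embeddings, an in-memory list stands in for a vector database, and the final model call is left out entirely. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: word counts. Real systems use learned dense vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Augment the question with retrieved context before calling a model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer using only the context."

# Illustrative knowledge base (an assumption for this example).
docs = [
    "Refunds are processed within 5 business days.",
    "The warehouse ships orders every weekday at noon.",
    "Support is available by chat from 9am to 5pm.",
]
print(build_prompt("How long do refunds take?", docs))
```

The prompt that reaches the model already contains the relevant fact, so the model's job shifts from recalling information to reading it, which is why retrieval tends to help with factuality and with data the model was never trained on.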

Pioneering labs like DeepMind, Anthropic, and OpenAI are actively building models that address these gaps, whether through memory enhancements, agent frameworks, or multimodal reasoning.

Conclusion: Evolving, Not Replacing

LeCun’s critique is not a death sentence for LLMs - it is a challenge to evolve.

LLMs are not the destination but a milestone in the broader journey toward intelligent systems. They have proven their utility across industries, inspired breakthroughs in interface design, and catalyzed a wave of enterprise experimentation.

The future of AI is hybrid, blending symbolic logic, neural embeddings, memory networks, causal inference, and real-world grounding. Whether JEPA or some newer architecture prevails, one lesson remains clear: language is a powerful interface, but intelligence is more than language.

To stay competitive, enterprises must treat LLMs not as magic wands, but as composable, improvable components in a broader AI ecosystem. The question is not whether LLMs will be obsolete, but how we evolve with them.

About the Author:

Towhidul Hoque is an executive leader in AI, data platforms, and digital transformation with 20 years of experience helping organizations build scalable, production-grade intelligent systems.