From RPA to Agentic AI: How Automation Grew Up and What It Means for Your Business

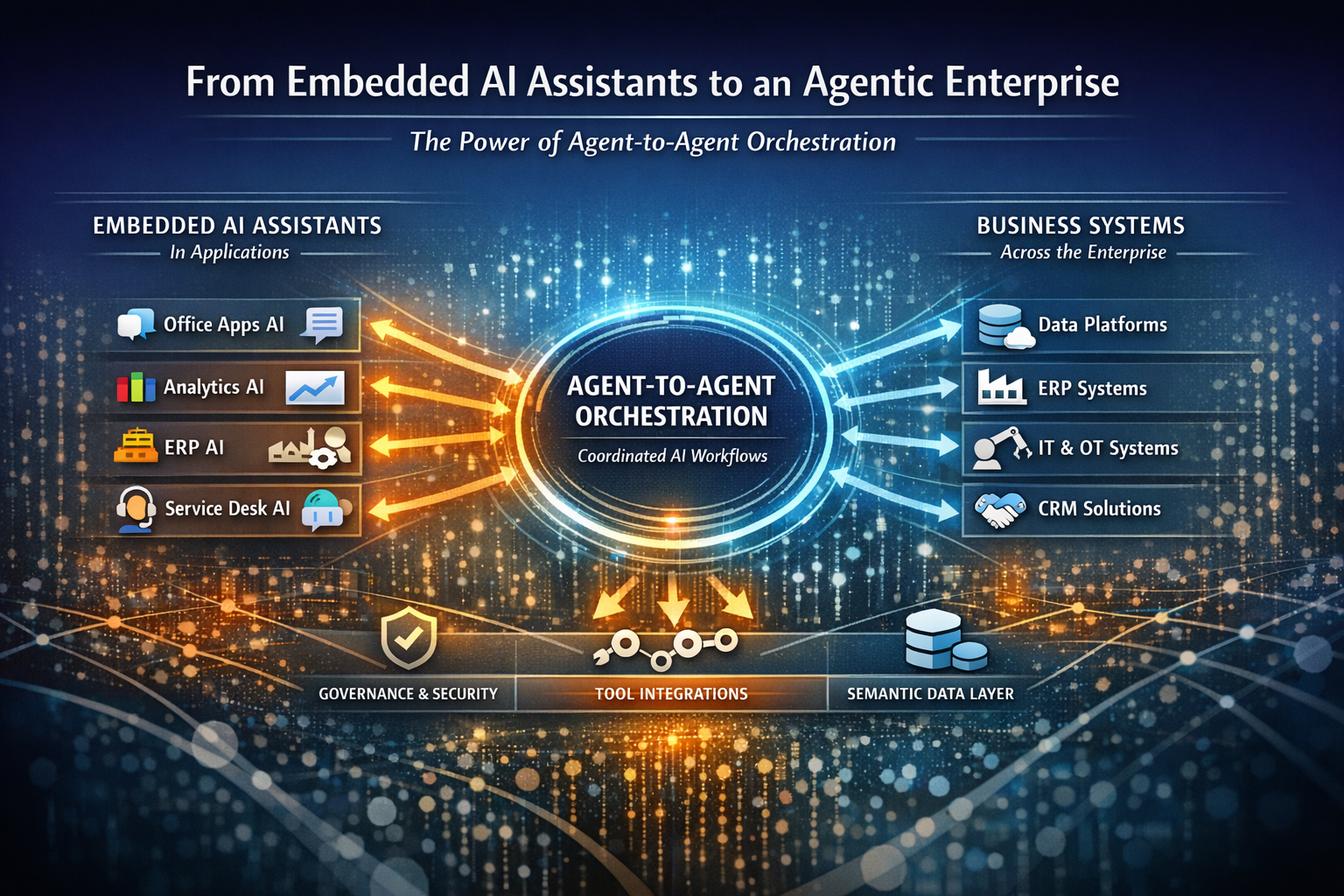

RPA made deterministic, rules-based tasks dependable at scale. The next wave, Agentic AI, introduces goal-seeking systems that plan multi-step work, call tools and APIs (including your RPA bots), collaborate with humans and other agents, and learn from feedback. The jump is powered by foundation models, orchestration frameworks (e.g., LangGraph, AutoGen), and the Model Context Protocol (MCP) for safe, standardized access to enterprise tools and knowledge. With the right process intelligence and governance (NIST AI RMF, ISO/IEC 42001), organizations can move from automating steps to delivering outcomes with traceability.

How we got here: RPA ➜ Intelligent Automation ➜ Agentic AI

RPA era (2015–2020): RPA began by automating deterministic, rules-based tasks, mimicking user actions in UIs and scripting calls to APIs. It went mainstream as enterprises sought gains in cost efficiency, accuracy, and compliance.

Intelligent Automation or Hyperautomation era (2019–2023): RPA was combined with OCR, intelligent document processing (IDP), NLP, ML, and process mining to orchestrate whole workflows instead of discrete steps. Centers of Excellence, bot orchestrators, and BPM tools matured the operating model.

Agentic AI era (2024 onward): Systems now pursue goals, plan, reason, and use tools (APIs, databases, and RPA bots) while collaborating with humans and other agents. New frameworks (AutoGen, LangGraph) and standards (MCP) have made this practical, governable, and ready for production use.

Why this matters now: According to McKinsey's research on generative AI, the share of employees' time spent on activities with technical automation potential has risen from roughly 50% (its 2017 estimate) to an estimated 60–70% with GenAI. The scope of automation is now broader and far more weighted toward knowledge work than it was just a few years ago.

RPA vs. Agentic AI: Similarities and Differences

| Dimension | RPA | Agentic AI |
|---|---|---|
| Core capability | Deterministic task automation via rules and UI and API scripting | Goal-driven planning, tool use, multi-agent collaboration |
| Data | Mostly structured, predictable | Structured + unstructured + multimodal (text, docs, logs, images) |
| Change tolerance | Brittle with UI changes; needs maintenance | More robust via planning, retrieval, retries; still needs guardrails |
| Orchestration | RPA orchestrators; BPM | Agent graphs (LangGraph), multi-agent frameworks (AutoGen), MCP connectors |
| Typical scope | Task and step automation | End-to-end outcomes (e.g., “close claim,” “prepare bid,” “resolve incident”) |
| Governance | Ops change controls | Responsible AI controls (NIST AI RMF, ISO/IEC 42001), evaluation pipelines, audit logs |
| Best together | Reliable actuators | Agents call RPA and APIs as tools inside plans |

What fueled the leap

The leap was fueled by five forces:

- Foundation models plus agent orchestration: LLMs provided broad language and knowledge capabilities, while frameworks like LangGraph enabled stateful, controllable agents with retries, memory, and human-in-the-loop checkpoints. AutoGen added support for multi-agent collaboration, which allows systems to divide and conquer complex work.

- Access via a standardized protocol: The Model Context Protocol (MCP) gave applications a common way to expose tools and knowledge to agents safely, cutting down on one-off integrations and improving governance and control.

- Economies of scale in infrastructure: Faster, cheaper inference and maturing infrastructure have made always-on agents both economical and observable.

- Process intelligence was ready: Mature process mining and event logging created the “maps” that let agents navigate and execute full end-to-end journeys, not just isolated tasks.

- Governance got real: Standards like NIST’s AI RMF and ISO/IEC 42001 introduced shared frameworks for risk management, transparency, human oversight, evaluation, and incident response.
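To make the standardized-access point concrete: an MCP server advertises each tool with a `name`, a `description`, and an `inputSchema` (a JSON Schema for the tool's arguments), so any compliant client can discover and call it the same way. The sketch below only mimics that descriptor shape in plain Python. The `post_journal_entry` tool and the `validate_arguments` helper are hypothetical, and the type check covers a small subset of JSON Schema, not what an MCP SDK or a full validator does.

```python
# A tool descriptor shaped like an MCP tool definition. The tool itself is hypothetical.
POST_JOURNAL_ENTRY = {
    "name": "post_journal_entry",
    "description": "Post a journal entry to the general ledger.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "account": {"type": "string"},
            "amount": {"type": "number"},
            "memo": {"type": "string"},
        },
        "required": ["account", "amount"],
    },
}

# Subset of JSON Schema primitive types mapped to Python types.
_JSON_TYPES = {"string": str, "number": (int, float), "boolean": bool}


def validate_arguments(tool: dict, args: dict) -> list[str]:
    """Return a list of problems with a proposed tool call; empty means it may proceed.

    Checks only required keys, unknown keys, and primitive types.
    """
    schema = tool["inputSchema"]
    props = schema.get("properties", {})
    errors = [f"missing: {key}" for key in schema.get("required", []) if key not in args]
    for key, value in args.items():
        if key not in props:
            errors.append(f"unknown: {key}")
            continue
        type_name = props[key]["type"]
        ok = isinstance(value, _JSON_TYPES[type_name])
        if type_name == "number" and isinstance(value, bool):
            ok = False  # bool is a subclass of int in Python, but not a JSON number
        if not ok:
            errors.append(f"wrong type: {key}")
    return errors
```

Rejecting a malformed call before it reaches the ledger is the point: `validate_arguments(POST_JOURNAL_ENTRY, {"amount": "125"})` returns `['missing: account', 'wrong type: amount']`.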

Impact across industries

Across industries, the shift to agentic AI is moving organizations from task automation to outcome delivery, with capabilities that span automation, orchestration, reasoning, decisioning, and autonomy, all tied to measurable value. In financial services, agents read and reason over contracts, triage exceptions, and orchestrate remediation across core systems, boosting straight-through outcomes while preserving auditability. Insurers are moving beyond first notice of loss (FNOL) scripts to multi-agent flows that coordinate subrogation, vendor scheduling, fraud checks, and coverage interpretation, with human approval required only where risk is high. In healthcare revenue-cycle management, agents unify IDP, payer rules, and EHR or clearinghouse workflows to cut turnaround, reduce denials, and document every decision. Manufacturers pair predictive insights with autonomous “ops dispatcher” agents that plan work orders, parts, crews, and permits end-to-end, improving uptime and labor efficiency. In the public sector and other regulated environments, governed agents bring traceability, permissions, and policy alignment, accelerating service delivery without sacrificing compliance. The net effect is higher throughput, lower cost-to-serve, shorter cycle times, and fewer errors, with value that is clearer and easier to defend.

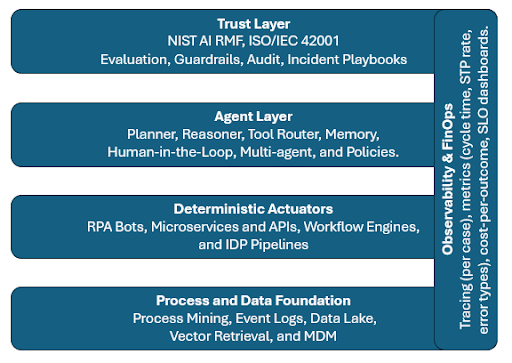

Reference architecture for the agentic automation stack

Think of the Agentic Automation Stack as a well-run enterprise in miniature. At its foundation lies the process and data layer, the single source of truth and the place where work is discovered. This layer captures operational footprints: process-mining maps of the happy path and messy exceptions, event logs from core systems, and a curated data lake or warehouse holding golden records. Unstructured knowledge isn’t an afterthought. Vector retrieval and document pipelines extract meaning from contracts, emails, and PDFs so agents can reason over them. Together, these elements form a living map of how value moves through the business, supported by clean, governed data services (SQL for structured facts, retrieval APIs for contextual insight) that every layer above relies on.
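The "happy path and messy exceptions" that process mining surfaces rest on a simple idea: group event-log rows by case, order each case's events by time, and count how often each distinct sequence of activities (a variant) occurs. The minimal sketch below uses a hypothetical claims log; real process-mining tools go much further (timing analysis, conformance checking, model discovery).

```python
from collections import Counter, defaultdict

# Hypothetical event log rows: (case_id, activity, timestamp).
EVENT_LOG = [
    ("C1", "Receive claim", 1), ("C1", "Validate", 2), ("C1", "Pay", 3),
    ("C2", "Receive claim", 1), ("C2", "Validate", 2), ("C2", "Pay", 4),
    ("C3", "Receive claim", 1), ("C3", "Validate", 3), ("C3", "Request docs", 5),
    ("C3", "Validate", 9), ("C3", "Pay", 12),
]


def discover_variants(log) -> Counter:
    """Group events into per-case traces and count each distinct path (variant)."""
    traces = defaultdict(list)
    for case_id, activity, ts in log:
        traces[case_id].append((ts, activity))
    # Sorting (timestamp, activity) pairs orders each trace chronologically.
    return Counter(
        tuple(activity for _, activity in sorted(events))
        for events in traces.values()
    )


variants = discover_variants(EVENT_LOG)
happy_path, happy_count = variants.most_common(1)[0]
exceptions = {variant: n for variant, n in variants.items() if variant != happy_path}
```

Here the most frequent variant (`Receive claim → Validate → Pay`, two cases) is the happy path, and the rework loop through `Request docs` shows up as an exception worth investigating.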

Figure: Reference Architecture for Agentic Automation

Above the foundation are the deterministic actuators, the dependable “muscle” of the stack. These include your RPA bots, microservices, APIs, workflow engines, and IDP tasks, each documented in a service catalog with SLAs, timeouts, idempotency rules, and clear error semantics. Actions are exposed through an API gateway or via the Model Context Protocol (MCP). Secrets are secured in a vault, with role-based access controls. When an agent needs to post a journal entry, issue a purchase order, or push a claim forward, these executors make it happen predictably, repeatably, and with full auditability.
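Idempotency rules and clear error semantics are what make an actuator safe for an agent to call more than once. The sketch below, assuming a hypothetical `post_journal_entry` bot and an in-memory result store, shows both: a repeated request with the same idempotency key returns the stored result, and only errors declared transient are retried. Timeouts are left out for brevity.

```python
from typing import Callable


class TransientError(Exception):
    """Failure the executor declares safe to retry (e.g., a gateway timeout)."""


class IdempotentExecutor:
    """Wrap a deterministic action with an idempotency key and bounded retries.

    Results are cached in memory here; a real system would persist them in a
    durable store shared by every worker that can run the action.
    """

    def __init__(self, action: Callable[[dict], dict], max_attempts: int = 3):
        self.action = action
        self.max_attempts = max_attempts
        self._results: dict[str, dict] = {}

    def run(self, idempotency_key: str, payload: dict) -> dict:
        # A repeated request with the same key returns the stored result
        # instead of executing the action (e.g., posting an entry) twice.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        last_error = None
        for _ in range(self.max_attempts):
            try:
                result = self.action(payload)
            except TransientError as exc:
                last_error = exc  # retry only errors declared transient
                continue
            self._results[idempotency_key] = result
            return result
        raise RuntimeError(f"gave up after {self.max_attempts} attempts") from last_error


# Usage: a hypothetical bot that times out once, then succeeds.
calls: list[dict] = []


def post_journal_entry(payload: dict) -> dict:
    calls.append(payload)
    if len(calls) == 1:
        raise TransientError("gateway timeout")
    return {"status": "posted", "amount": payload["amount"]}


executor = IdempotentExecutor(post_journal_entry)
first = executor.run("je-0001", {"amount": 100})
second = executor.run("je-0001", {"amount": 100})  # served from the stored result
```

`calls` ends with two entries (one failed attempt, one success); the second `run` never reaches the bot. Retrying is only fully safe if the downstream system also deduplicates on the key, because a timeout can arrive after the side effect has already happened.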

At the center is the agent layer, the stack’s working brain. A planner and reasoner (e.g., built with LangGraph) turns goals into plans, routes work to the right tools, remembers what just happened, and knows when to ask for help. Specialist agents, such as a contract parser, a claims-triage agent, or a maintenance dispatcher, take on domain work, while multi-agent coordination (e.g., AutoGen) handles negotiation and edge cases. The graph makes the loop explicit: Plan → Select Tools → Act → Observe → Learn, with human-in-the-loop checkpoints where risk or ambiguity is high. Tools are permissioned by role, cost-capped, and observable. Evaluation hooks score each step so the system can fall back, escalate, or learn.
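The control flow of that loop can be sketched without any framework. This is a hypothetical illustration, not LangGraph's or AutoGen's API: the plan is given as a fixed list (in practice an LLM planner would produce it), tools are plain functions, and a caller-supplied `approve` callback stands in for the human-in-the-loop checkpoint. The "Learn" step is outside the sketch; the returned log is what an offline evaluation or tuning process would consume.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[dict], dict]


@dataclass
class Step:
    tool: str                 # tool the planner selected for this step
    args: dict
    high_risk: bool = False   # True: a human must approve before the agent acts


@dataclass
class RunLog:
    events: list = field(default_factory=list)  # (kind, tool, detail) tuples for audit


def run_plan(plan: list[Step], tools: dict[str, Tool], approve: Callable[[Step], bool]) -> RunLog:
    """Execute a plan: select tool, pass the HITL checkpoint if needed, act, observe, evaluate."""
    log = RunLog()
    for step in plan:
        tool = tools.get(step.tool)                       # Select Tools
        if tool is None:
            log.events.append(("escalate", step.tool, "no such tool"))
            break
        if step.high_risk and not approve(step):          # human-in-the-loop checkpoint
            log.events.append(("rejected", step.tool, step.args))
            break
        result = tool(step.args)                          # Act
        log.events.append(("done", step.tool, result))    # Observe (and record)
        if not result.get("ok", False):                   # evaluation hook: escalate on failure
            log.events.append(("escalate", step.tool, "step failed"))
            break
    return log


# Usage with hypothetical tools and a fixed plan.
tools = {
    "triage_claim": lambda args: {"ok": True, "severity": "low"},
    "pay_claim": lambda args: {"ok": True, "paid": args["amount"]},
}
plan = [Step("triage_claim", {"claim": "C1"}), Step("pay_claim", {"amount": 5000}, high_risk=True)]
approved = run_plan(plan, tools, approve=lambda step: step.args["amount"] <= 10_000)
rejected = run_plan(plan, tools, approve=lambda step: False)
```

The design choice worth copying is that approval and escalation are branches of the loop itself, recorded in the same log as every action, rather than a side channel.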

Presiding over everything is the trust layer, the organization’s constitution. Here, NIST AI RMF and ISO/IEC 42001 expectations are encoded as policy-as-code. Continuous evaluations check for quality, safety, and bias. Lineage is maintained for prompts, agents, models, and tools, with artifacts versioned and cryptographically signed. Incident playbooks define how to detect, contain, roll back, and document events. This is not bureaucracy for its own sake. It is how you ship fast and still pass audit with evidence in hand.
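One way to make lineage verifiable is to record each released agent as a manifest (agent version, a hash of the prompt, model, tool versions) and sign a canonical serialization of it. The sketch below uses HMAC-SHA256 from Python's standard library; the key, agent name, and model name are hypothetical placeholders. HMAC relies on a shared secret, so where third parties must verify artifacts, asymmetric signatures would be the more usual choice.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; in practice it would be fetched from the secrets vault.
SIGNING_KEY = b"replace-with-key-from-vault"


def canonical(manifest: dict) -> bytes:
    """Serialize deterministically so the same manifest always yields the same bytes."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(manifest: dict, key: bytes = SIGNING_KEY) -> str:
    return hmac.new(key, canonical(manifest), hashlib.sha256).hexdigest()


def verify(manifest: dict, signature: str, key: bytes = SIGNING_KEY) -> bool:
    # compare_digest compares in constant time to avoid leaking timing information.
    return hmac.compare_digest(sign(manifest, key), signature)


# A lineage record tying a released agent to exact prompt, model, and tool versions.
manifest = {
    "agent": "claims-triage",
    "agent_version": "1.4.0",
    "prompt_sha256": hashlib.sha256(b"You are a claims-triage agent.").hexdigest(),
    "model": "provider-model-name",
    "tools": {"pay_claim": "2.1.0", "triage_claim": "1.0.3"},
}
signature = sign(manifest)
```

Any change to the manifest, such as bumping `agent_version` without re-signing, makes `verify` return `False`, which is the evidence an auditor needs that what ran is what was approved.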

Running vertically through the stack are observability and FinOps, the nervous system and P&L view combined. Every step is traced, from the user’s click to the bot’s action. Dashboards surface cycle time, straight-through processing rates, error types, and, most importantly, cost per outcome. Token and tool usage are metered, and evaluation scores ride alongside traces, so leaders can see whether quality and cost are trending in the right direction.
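The dashboard numbers can be derived directly from traces. The sketch below assumes a hypothetical span record carrying a case ID, a kind (`llm`, `tool`, or `human`), a metered cost, and start and end times, and rolls spans up to straight-through processing rate, average cycle time, and cost per outcome. Human review is recorded here with zero metered cost; a fuller model would price that time too.

```python
from dataclasses import dataclass


@dataclass
class Span:
    case_id: str
    kind: str         # "llm", "tool", or "human" (hypothetical taxonomy)
    cost_usd: float   # metered token or tool cost attributed to this span
    start_s: float    # start time, seconds
    end_s: float      # end time, seconds


def outcome_metrics(spans: list[Span]) -> dict:
    """Roll span-level traces up to case-level numbers: STP rate, cycle time, cost per outcome."""
    cases: dict[str, list[Span]] = {}
    for span in spans:
        cases.setdefault(span.case_id, []).append(span)
    if not cases:
        raise ValueError("no traces to aggregate")
    n = len(cases)
    # A case is straight-through if no span in it involved a human.
    stp_cases = sum(all(s.kind != "human" for s in case) for case in cases.values())
    cycle_total = sum(
        max(s.end_s for s in case) - min(s.start_s for s in case) for case in cases.values()
    )
    return {
        "cases": n,
        "stp_rate": stp_cases / n,
        "avg_cycle_time_s": cycle_total / n,
        "cost_per_outcome_usd": sum(s.cost_usd for s in spans) / n,
    }


spans = [
    Span("A", "llm", 0.02, 0, 2), Span("A", "tool", 0.01, 2, 5),
    Span("B", "llm", 0.04, 0, 3), Span("B", "human", 0.0, 3, 60), Span("B", "tool", 0.01, 60, 61),
]
metrics = outcome_metrics(spans)
```

In this toy trace, case A runs straight through in 5 seconds while case B waits 57 seconds for a human, so the STP rate is 0.5 and the average cycle time is 33 seconds.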

A few design instincts keep teams aligned. Start with the outcome, not the model: name the KPI you will move and fit agents, tools, and guardrails to that goal. Put humans in the loop where risk is real, and make override and approval part of the graph, not a side channel. Treat governance as a product feature: evaluation, audit, and incident response should be as tangible as APIs. Build for composability and portability by exposing tools via MCP and avoiding lock-in to a single vendor. Above all, measure relentlessly. If cost per outcome is not falling while quality rises, revisit the design.

A pragmatic 90-day blueprint

Here is a simple way to turn 90 days into real results without boiling the ocean.

Weeks 1–2: Choose the outcome.

Resist the urge to automate everything. Pick one journey you can own end-to-end, such as closing a claim, paying an invoice, or resolving an incident. Write a one-page outcome charter that names the owner, the system of record, and the three numbers that matter: cycle time, straight-through processing (STP) rate, and cost per case. These become the scoreboard for the next 90 days.
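Keeping the charter as data, not just a document, makes the scoreboard computable from day one. The sketch below is a hypothetical shape for it: the field names are illustrative, and the baseline and current values are made-up numbers, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeCharter:
    """The one-page charter as data: who owns the journey and what the scoreboard measures."""
    journey: str
    owner: str
    system_of_record: str
    baseline_cycle_time_h: float
    baseline_stp_rate: float        # share of cases with no human touch, 0..1
    baseline_cost_per_case: float

    def scoreboard(self, cycle_time_h: float, stp_rate: float, cost_per_case: float) -> dict:
        """Change versus baseline; negative cycle-time and cost changes are improvements."""
        return {
            "cycle_time_change": cycle_time_h / self.baseline_cycle_time_h - 1,
            "stp_rate_change_pts": stp_rate - self.baseline_stp_rate,  # 0.25 = +25 points
            "cost_per_case_change": cost_per_case / self.baseline_cost_per_case - 1,
        }


# Illustrative numbers only.
charter = OutcomeCharter("Pay invoice", "AP process owner", "ERP", 72.0, 0.40, 18.0)
board = charter.scoreboard(cycle_time_h=24.0, stp_rate=0.65, cost_per_case=9.0)
```

With these inputs, cycle time falls by about two thirds, STP rises by 25 points, and cost per case halves.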

Weeks 3–4: See the work and de-risk it.

Shine a light on how the work actually flows. Use process mining to map the happy path and the few exceptions that cause most delays. In parallel, run a short AI risk and impact review aligned to NIST AI RMF and ISO/IEC 42001: what could go wrong, what needs human approval, and what evidence auditors will expect. The output is a “guardrails + exceptions” sheet that defines where agents must ask before acting.
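One way to keep the "guardrails + exceptions" sheet from drifting away from what the agent actually does is to express it as data the agent checks before every action. In the sketch below the rule format, action names, and dollar thresholds are all hypothetical. Rules are evaluated in order, the first match wins, and anything not on the sheet defaults to asking a human.

```python
# Hypothetical guardrails sheet: action, upper amount bound (None = no bound), decision.
GUARDRAILS = [
    {"action": "pay_claim", "max_amount": 1_000, "decision": "auto"},
    {"action": "pay_claim", "max_amount": 25_000, "decision": "ask"},
    {"action": "pay_claim", "max_amount": None, "decision": "deny"},
    {"action": "send_status_email", "max_amount": None, "decision": "auto"},
]


def decide(action: str, amount: float = 0.0, rules: list[dict] = GUARDRAILS) -> str:
    """Return "auto", "ask", or "deny" for a proposed action; first matching rule wins."""
    for rule in rules:
        if rule["action"] != action:
            continue
        if rule["max_amount"] is None or amount <= rule["max_amount"]:
            return rule["decision"]
    return "ask"  # fail safe: anything not on the sheet requires human approval
```

For example, `decide("pay_claim", 5_000)` returns `"ask"`, and an action nobody anticipated also returns `"ask"`. Defaulting to a human, not to autonomy, is the design choice that makes the sheet safe to start small.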

Weeks 5–8: Build the first agentic slice.

Stand up a planner and agent (e.g., with LangGraph) and wire it to your tools through MCP: RPA bots for deterministic steps, APIs and workflow engines for system actions, IDP for documents, and ticketing for handoffs. Keep it narrow but complete: the agent should plan the work, select the right tools, act, observe the result, and learn. Insert a human-in-the-loop checkpoint exactly where risk or ambiguity is highest, and log every decision with enough context to explain it later.

Weeks 9–12: Harden, scale, and prove value.

Teach the system to handle the real world. Add multi-agent patterns for negotiation and exception handling, plus evaluation harnesses, guardrails, and a tamper-evident audit trail. Turn on observability so leaders can see time saved, errors avoided, dollars recovered, and the cost per outcome trending down. When the slice is stable, clone the pattern to the next adjacent journey.
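A common way to make an audit trail tamper-evident is hash chaining: each entry's hash covers both its own content and the previous entry's hash, so editing, reordering, or removing an earlier entry breaks every hash after it. The minimal sketch below uses only the standard library; the event fields are hypothetical. Note that chaining alone cannot detect the newest entries being cut off the end, so the latest hash should also be anchored somewhere the agent cannot write.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(chain: list[dict], event: dict) -> dict:
    """Append an event whose hash covers its content and the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    entry = {"prev": prev_hash, "event": event, "hash": _entry_hash(prev_hash, event)}
    chain.append(entry)
    return entry


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash; edits, reordering, or deletions before the tail break it."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"step": "triage_claim", "result": "low"})
append_entry(audit_log, {"step": "pay_claim", "approved_by": "reviewer-1"})
```

Quietly changing the first entry's `result` after the fact, or swapping the two entries, makes `verify_chain` return `False`.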

What good looks like at Day 90: a working, auditable agent that moves a real KPI in production, a playbook your teams can repeat, and a dashboard that makes the case for scaling in dollars and minutes.

ROI you can defend

- Labor & cycle time: Agentic workflows pull hours out of queues and handoffs, cutting turnaround from days to hours while keeping ~99%+ accuracy on mature tasks. Show baseline → current → run-rate hours and spend avoided.

- Quality & risk: Agents log every step and its rationale. Combined with NIST- and ISO-aligned controls, this makes audits repeatable and incident handling procedural.

- Throughput & uptime: In asset-heavy operations, predictive and agent-assisted maintenance can deliver a 30–50% reduction in downtime and 20–40% longer asset life.

Ultimately, report value in dollars, time saved, errors avoided, and capacity unlocked against cost per outcome (inference + tool calls + ops). Scale when cost per outcome trends down and quality trends up.
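The scaling rule in the last sentence can be made mechanical. The sketch below computes cost per outcome as (inference + tool calls + ops) divided by outcomes for each reporting period, then applies one conservative reading of "trends down and quality trends up": over the last few periods, cost per outcome must fall and quality must rise at every step. The `Period` fields, the three-period window, and the figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Period:
    outcomes: int          # cases completed in the period
    inference_usd: float
    tool_calls_usd: float
    ops_usd: float         # run and support cost attributed to the agentic flow
    quality: float         # e.g., share of cases passing evaluation, 0..1

    @property
    def cost_per_outcome(self) -> float:
        return (self.inference_usd + self.tool_calls_usd + self.ops_usd) / self.outcomes


def ready_to_scale(history: list[Period], window: int = 3) -> bool:
    """True only if, across the last `window` periods, cost fell and quality rose at each step."""
    recent = history[-window:]
    if len(recent) < window:
        return False  # not enough evidence yet
    return all(
        later.cost_per_outcome < earlier.cost_per_outcome and later.quality > earlier.quality
        for earlier, later in zip(recent, recent[1:])
    )


history = [
    Period(1000, 900.0, 300.0, 1800.0, 0.91),   # $3.00 per outcome
    Period(1400, 1000.0, 350.0, 1850.0, 0.93),  # about $2.29 per outcome
    Period(2000, 1100.0, 400.0, 1900.0, 0.95),  # $1.70 per outcome
]
```

Here `ready_to_scale(history)` is `True`; a single period where quality dips would hold scaling back until the trend resumes.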

What to watch in 2025–2026

- Convergence: RPA, IDP, process mining, and agent frameworks unify under a single control plane for agentic automation.

- Open context standards: MCP adoption expands as the common way to wire tools and knowledge into agents, improving safety and portability.

- Cost curves: Continued inference price declines make always-on agents more attractive; architect for portability across model providers.

How DX Advisory Solutions can help

- Discovery & prioritization workshop (2 weeks): Identify 1–2 journeys with near-term ROI and align success metrics, risks, and stakeholders.

- Pilot (6–8 weeks): Build the first agentic slice with your data, tools, and guardrails and integrate with existing RPA and APIs.

- Scale-up (ongoing): Expand to adjacent journeys, mature governance (NIST and ISO), and establish internal capability (training + playbooks).

Call to action

Ready to move from automating steps to delivering outcomes? Book a 30-minute advisory session with DXAS to plan your agentic automation roadmap.

Acknowledgments

- McKinsey & Company, “The economic potential of generative AI: The next productivity frontier” (2023): automation potential and industry impact.

- NIST, Artificial Intelligence Risk Management Framework (AI RMF 1.0), 2023.

- ISO/IEC 42001:2023, AI management system standard.

About the author

Towhidul Hoque is an executive leader in AI, data platforms, and digital transformation with 20 years of experience helping organizations build scalable, production-grade intelligent systems.