Model Context Protocol (MCP): The Universal Connector for Agentic AI’s Next Era

The Problem MCP Solves

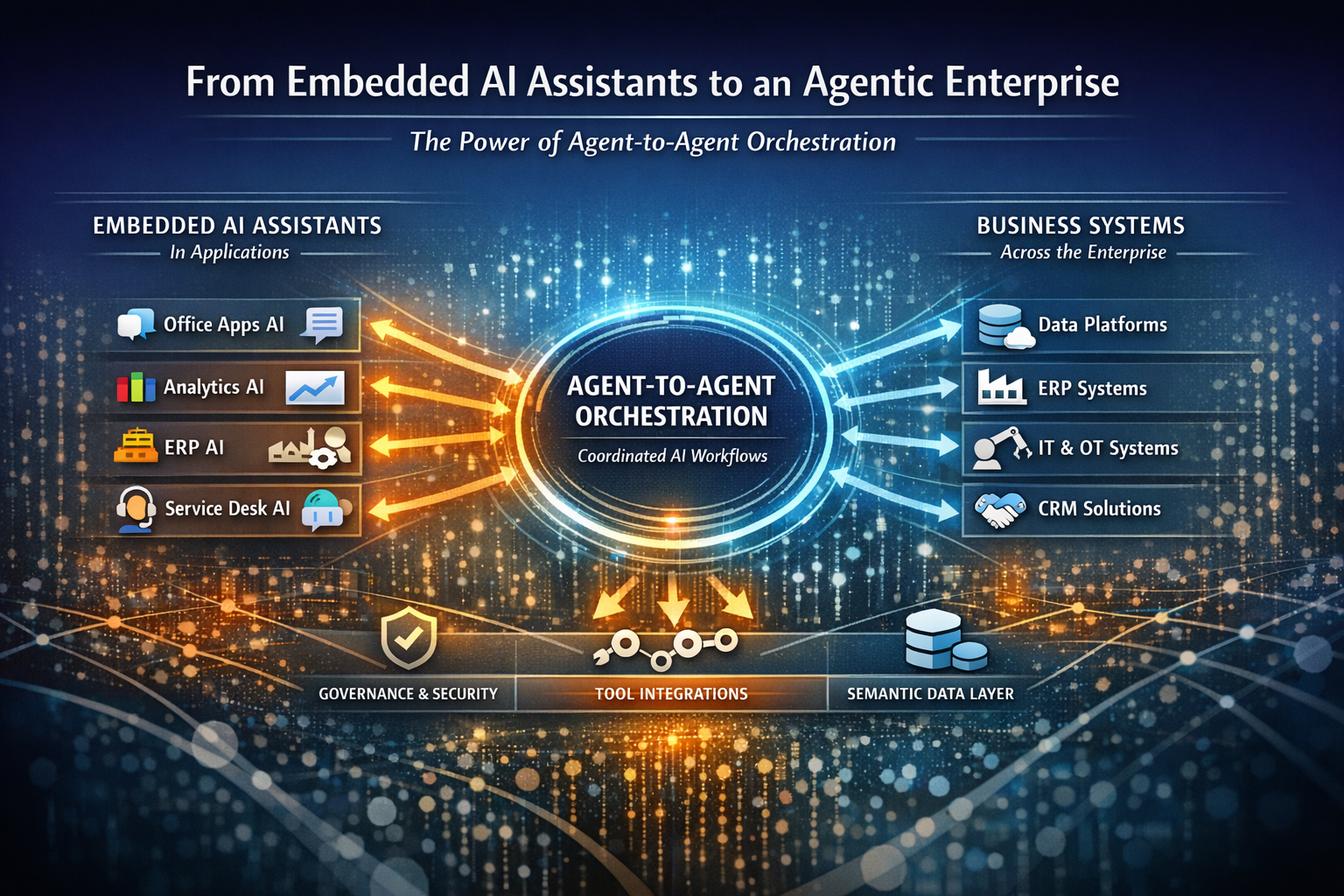

In my work building AI solutions across industries, one truth stands out: an AI system is only as valuable as the data and tools it can reach. Historically, every AI tool integration required a custom, one-off connector. This created the infamous N × M problem: with N AI models or applications and M data sources, SaaS platforms, or local tools, developers had to build and maintain up to N × M separate integrations. The result was slow development, ballooning costs, and brittle connections that break whenever either side changes.

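That is why MCP matters. It promises to replace this tangle with a single, elegant bridge: a standard way for AI models to “talk” to tools, APIs, and data sources without the integration grind. Because each AI application implements the protocol once and each tool is wrapped once, N × M custom integrations shrink to roughly N + M.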

What Is MCP?

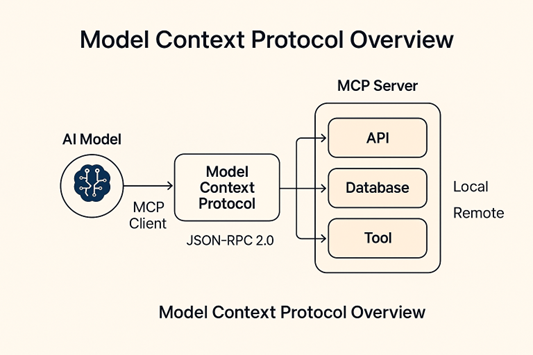

Launched by Anthropic in November 2024 and since embraced by OpenAI, Microsoft, Google DeepMind, Replit, and others, MCP is an open-source, open-standard protocol for connecting AI models to external capabilities.

I often describe it to colleagues as the USB-C of AI ecosystems: one connection standard for everything.

- Client–Server Model:

  - MCP Client: Runs inside the host AI application (e.g., Claude, ChatGPT) and requests capabilities from servers.

  - MCP Server: Wraps a tool or data source and exposes it in a standardized way, as tools the model can call, resources it can read, and prompts it can use.

- Communication Standard: Uses JSON-RPC 2.0 messages over stateful sessions, so requests, responses, and errors all share one structured format.

- Deployment Flexibility: Works locally over standard input/output (your computer’s files, private databases) or remotely over HTTP (cloud APIs, enterprise systems).
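To make the client–server exchange concrete, here is a minimal sketch of the JSON-RPC 2.0 messages a client might send to discover and then invoke a tool. The method names `tools/list` and `tools/call` follow the MCP specification; the tool name `query_database`, its `sql` argument, and the `make_request` helper are hypothetical. A real client would first complete MCP's `initialize` handshake and send these messages over a transport rather than printing them.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Request ids; JSON-RPC 2.0 matches each response to its request by "id".
_ids = itertools.count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object for an MCP method (hypothetical helper)."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Step 1: discover which tools the server exposes.
list_tools = make_request("tools/list")

# Step 2: invoke one tool. "query_database" and its "sql" argument are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "query_database", "arguments": {"sql": "SELECT COUNT(*) FROM orders"}},
)

# Over the stdio transport, each message travels as a single line of JSON.
for msg in (list_tools, call_tool):
    print(json.dumps(msg))
```

Because every server speaks this same request shape, the client code does not change when a different tool sits on the other end.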

Why MCP Is a Breakthrough

Talking with early adopters and reviewing MCP’s design, I have seen why it is generating so much buzz among serious AI teams. It removes long-standing integration pain points while unlocking richer, more context-aware workflows. Three capabilities set it apart:

- Unified Integration Layer: Instead of writing bespoke code for every integration, developers build an MCP server once and use it from any MCP-compatible client.

- Contextual Awareness: Tools connected via MCP do not just send raw data; they also share structure, metadata, and usage guidance, such as a plain-language description of each tool and a JSON Schema for its inputs. For example, a database MCP server can tell the AI the table schema before it even drafts a query.

- Interoperable Across Models: A server built for Claude can work with ChatGPT, Gemini, or future LLMs without rewrites, as long as the host application supports MCP.
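The database example can be sketched from the server side. This is a minimal, hypothetical illustration using only the standard library, not an official MCP SDK: the `describe_table` tool, the in-memory `SCHEMAS` data, and the `handle` dispatcher are assumptions. The tool descriptor fields (`name`, `description`, `inputSchema`) and the `content` list in the result follow the shape MCP uses for tools, and the error codes are the standard JSON-RPC 2.0 ones. A production server would also handle initialization, transport, and richer error reporting.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical schema data the server can share with the model.
SCHEMAS: Dict[str, Dict[str, str]] = {
    "orders": {"id": "INTEGER", "customer_id": "INTEGER", "total": "DECIMAL(10,2)"},
}

# Each tool advertises its name, a plain-language description, and a JSON Schema
# for its inputs, so the model knows how to call it before it calls it.
TOOLS = [
    {
        "name": "describe_table",
        "description": "Return the column names and types of a database table.",
        "inputSchema": {
            "type": "object",
            "properties": {"table": {"type": "string"}},
            "required": ["table"],
        },
    }
]


def describe_table(args: Dict[str, Any]) -> str:
    """Hypothetical tool: return a table's columns as JSON (empty object if unknown)."""
    return json.dumps(SCHEMAS.get(args.get("table", ""), {}))


HANDLERS: Dict[str, Callable[[Dict[str, Any]], str]] = {"describe_table": describe_table}


def make_error(req_id: Any, code: int, message: str) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request to an MCP-style method."""
    req_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": TOOLS}}
    if method == "tools/call":
        params = request.get("params", {})
        handler = HANDLERS.get(params.get("name", ""))
        if handler is None:
            return make_error(req_id, -32602, "Unknown tool")  # -32602: invalid params
        text = handler(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": req_id, "result": result}
    return make_error(req_id, -32601, "Method not found")  # -32601: method not found


# Usage: the model asks for a table's schema before drafting a query.
reply = handle({
    "jsonrpc": "2.0", "id": 7, "method": "tools/call",
    "params": {"name": "describe_table", "arguments": {"table": "orders"}},
})
print(reply["result"]["content"][0]["text"])
```

Nothing in `handle` refers to a particular model, which is what lets the same server answer Claude, ChatGPT, or any other MCP-capable host.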

Real-World Uses

The true measure of any protocol is how it works in the wild, and MCP is already proving itself. I have seen it shorten development cycles, tighten feedback loops, and make AI feel like a first-class citizen in existing workflows.

- Enterprise Search: Securely mount internal document repositories and let the AI query them with context-aware retrieval.

- Developer Workflows: Hook into GitHub, Jira, or CI/CD pipelines for code review, bug triage, and release automation.

- Data Science Pipelines: Connect directly to Snowflake, Databricks, or local CSVs for analysis without leaving the AI interface.

Security & Governance

With power comes exposure. As MCP adoption grows, researchers have been mapping its attack surfaces and finding ways it could be abused. Security studies in 2025 flagged two top concerns:

- Malicious MCP servers that could exfiltrate data or inject harmful instructions.

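- Cross-tool attacks, where individually harmless tools combine into a vulnerability (for example, one tool that reads private files paired with another that can send data to an external endpoint).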

From my perspective, this is where many organizations will make or break their MCP rollout. The smartest teams I have worked with are already implementing:

- User consent prompts before tool access.

- Allowlists and registries of approved MCP servers.

- Capability-scoped permissions, so each tool declares exactly what it can do and is granted nothing more.
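These three practices can be combined into a single check that a host runs before forwarding any `tools/call` request. This is a minimal sketch of a host-side policy layer, not something defined by the MCP specification: the server ids, scope strings such as `write:issues`, tool names, and the `authorize_call` and `always_approve` functions are all hypothetical. The check applies the cheap, non-interactive rules first and prompts the user last, so people are never asked to approve a call that policy would reject anyway.

```python
from typing import Callable, Dict, Set

# Hypothetical allowlist/registry: approved MCP server id -> scopes it has been granted.
APPROVED_SERVERS: Dict[str, Set[str]] = {
    "internal-docs": {"read:documents"},
    "github": {"read:repo", "write:issues"},
}

# Consent callback: (server, tool, scope) -> True if the user approves the call.
ConsentFn = Callable[[str, str, str], bool]


def authorize_call(server: str, tool: str, required_scope: str, ask_user: ConsentFn) -> bool:
    """Gate a tool call: allowlist first, then scope, then user consent."""
    granted = APPROVED_SERVERS.get(server)
    if granted is None:
        return False  # server is not on the approved registry
    if required_scope not in granted:
        return False  # tool wants more than this server was approved for
    # Ask the user only for calls that policy would otherwise allow.
    return ask_user(server, tool, required_scope)


def always_approve(server: str, tool: str, scope: str) -> bool:
    """Stand-in for a real consent prompt, for demonstration only."""
    return True


print(authorize_call("github", "create_issue", "write:issues", always_approve))  # True
print(authorize_call("github", "delete_repo", "admin:repo", always_approve))  # False
print(authorize_call("unknown-server", "read_file", "read:files", always_approve))  # False
```

In a real deployment, `ask_user` would surface an actual prompt in the host's interface, and the allowlist would come from a managed registry rather than a hard-coded dictionary.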

Industry Adoption and Momentum

The speed of adoption tells its own story. In less than a year, MCP has gone from a concept to a unifying standard embraced by some of the most influential names in AI and software development:

- Anthropic: MCP powers Claude Desktop’s ability to mount local tools.

- OpenAI: Integrating MCP into its Agents SDK, with a ChatGPT rollout planned.

- Microsoft: Adding MCP support to Windows AI Foundry and Copilot Studio.

- Google DeepMind: Building MCP into Gemini’s enterprise ecosystem.

- Replit, Codeium, and Sourcegraph: Using MCP to supercharge AI-assisted coding.

The Road Ahead

Looking ahead, I expect MCP to become a foundational layer for the agentic AI era, in which models do not just respond but plan, reason, and act autonomously. In that future, MCP can serve as the bridge between intelligence and execution.

If I had to place a bet, I would say security will be the make-or-break factor. Without trust, no enterprise will connect its crown-jewel systems to a protocol, no matter how elegant. But if the community can keep adoption broad, security strong, and development open, MCP could become as standard for AI as HTTP is for the web.

Bottom Line

The Model Context Protocol is not just a convenience feature; it is the connective tissue for a future where AI can plug into any tool, anywhere, securely and seamlessly. The organizations implementing it now are laying the groundwork for AI workflows that will feel as natural to connect as plugging in a mouse, but far more powerful.

Call to Action

Ready to assess how prepared your organization is to integrate its AI systems across your technology stack? Book a 30-minute advisory session with DXAS.

About the Author

Towhidul Hoque is an executive leader in AI, data platforms, and digital transformation with 20 years of experience helping organizations build scalable, production-grade intelligent systems.